GPUd is designed to ensure GPU efficiency and reliability by actively monitoring GPUs and effectively managing AI/ML workloads.

GPUd is built on years of experience operating large-scale GPU clusters at Meta, Alibaba Cloud, Uber, and Lepton AI. It is carefully designed to be self-contained and to integrate seamlessly with other systems such as Docker, containerd, Kubernetes, and Nvidia ecosystems.

- First-class GPU support: GPUd is GPU-centric, providing a unified view of critical GPU metrics and issues.

- Easy to run at scale: GPUd is a self-contained binary that runs on any machine with a low footprint.

- Production grade: GPUd is used in Lepton AI's production infrastructure.

Most importantly, GPUd operates with minimal CPU and memory overhead in a non-critical path and requires only read-only operations. See architecture for more details.

The fastest way to see gpud in action is to watch our 40-second demo video below. For more detailed guides, see our Tutorials page.

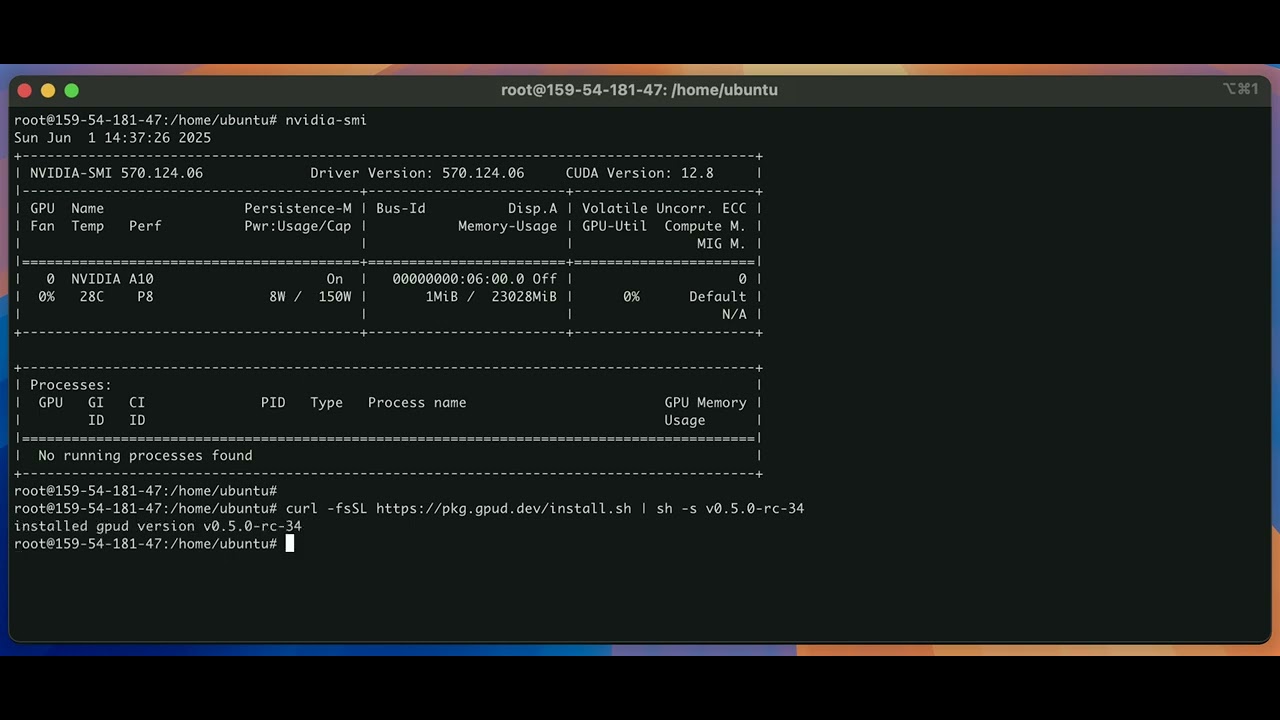

To install from the official release on Linux amd64 (x86_64) machine:

curl -fsSL https://pkg.gpud.dev/install.sh | shTo specify a version:

curl -fsSL https://pkg.gpud.dev/install.sh | sh -s v0.6.0Note that the install script does not currenlty support other architectures (e.g., arm64) and OSes (e.g., macOS).

This section covers running gpud directly on a host machine.

Start the service:

sudo gpud up [--token <LEPTON_AI_TOKEN>]Note: The optional

--tokenconnectsgpudto the Lepton Platform. You can get a token from the Settings > Tokens page on your dashboard. You can then view your machine's status on the self-managed nodes dashboard.

Stop the service:

sudo gpud downUninstall:

sudo rm /usr/local/bin/gpud

sudo rm /etc/systemd/system/gpud.serviceRun in the foreground:

gpud run [--token <LEPTON_AI_TOKEN>]Run in the background:

nohup sudo /usr/local/bin/gpud run [--token <LEPTON_AI_TOKEN>] &>> <your_log_file_path> &Uninstall:

sudo rm /usr/local/bin/gpudThe recommended way to deploy GPUd on Kubernetes is with our official Helm chart.

A Dockerfile is provided to build a container image from source. For complete instructions, please see our Docker guide in CONTRIBUTING.md.

- Monitor critical GPU and GPU fabric metrics (power, temperature).

- Reports GPU and GPU fabric status (nvidia-smi parser, error checking).

- Detects critical GPU and GPU fabric errors (kmsg, hardware slowdown, NVML Xid event, DCGM).

- Monitor overall system metrics (CPU, memory, disk).

Check out components for a detailed list of components and their features.

For users looking to set up a platform to collect and process data from gpud, please refer to INTEGRATION.

GPUd collects a small anonymous usage signal by default to help the engineering team better understand usage frequencies. The data is strictly anonymized and does not contain any sensitive data. You can disable this behavior by setting GPUD_NO_USAGE_STATS=true. If GPUd is run with systemd (default option for the gpud up command), you can add the line GPUD_NO_USAGE_STATS=true to the /etc/default/gpud environment file and restart the service.

If you opt-in to log in to the Lepton AI platform, to assist you with more helpful GPU health states, GPUd periodically sends system runtime related information about the host to the platform. All these info are system workload and health info, and contain no user data. The data are sent via secure channels.

GPUd is still in active development, regularly releasing new versions for critical bug fixes and new features. We strongly recommend always being on the latest version of GPUd.

When GPUd is registered with the Lepton platform, the platform will automatically update GPUd to the latest version. To disable such auto-updates, if GPUd is run with systemd (default option for the gpud up command), you may add the flag FLAGS="--enable-auto-update=false" to the /etc/default/gpud environment file and restart the service.

Please see the CONTRIBUTING.md for guidelines on how to contribute to this project.