
Closed

Labels

ci/flake: This is a known failure that occurs in the tree. Please investigate me!

Description

Test Name

K8sDatapathConfig MonitorAggregation Checks that monitor aggregation restricts notifications

Failure Output

FAIL: Found 1 k8s-app=cilium logs matching list of errors that must be investigated:

Stacktrace


/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9/src/github.com/cilium/cilium/test/ginkgo-ext/scopes.go:427

Found 1 k8s-app=cilium logs matching list of errors that must be investigated:

level=error

/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9/src/github.com/cilium/cilium/test/ginkgo-ext/scopes.go:425

Standard Output


Number of "context deadline exceeded" in logs: 0

Number of "level=error" in logs: 0

Number of "level=warning" in logs: 0

Number of "Cilium API handler panicked" in logs: 0

Number of "Goroutine took lock for more than" in logs: 0

No errors/warnings found in logs

Number of "context deadline exceeded" in logs: 0

Number of "level=error" in logs: 0

Number of "level=warning" in logs: 1

Number of "Cilium API handler panicked" in logs: 0

Number of "Goroutine took lock for more than" in logs: 0

Top 1 errors/warnings:

Network status error received, restarting client connections

⚠️ Found "level=error" in logs 1 times

Number of "context deadline exceeded" in logs: 0

Number of "level=error" in logs: 1

Number of "level=warning" in logs: 2

Number of "Cilium API handler panicked" in logs: 0

Number of "Goroutine took lock for more than" in logs: 0

Top 2 errors/warnings:

Waiting for k8s node information

Cannot create CEP

Cilium pods: [cilium-d6lj4 cilium-nrcwg]

Netpols loaded:

CiliumNetworkPolicies loaded: 202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito::l3-policy-demo

Endpoint Policy Enforcement:

Pod Ingress Egress

testclient-2-q4mjg false false

testclient-84hqb false false

testds-98856 false false

grafana-7fd557d749-f5k7b false false

prometheus-d87f8f984-whplh false false

test-k8s2-5b756fd6c5-52fz4 false false

testclient-2dm6l false false

testds-c8h9w false false

coredns-8cfc78c54-hxspl false false

testclient-2-bjf2p false false

Cilium agent 'cilium-d6lj4': Status: Ok Health: Ok Nodes "" ContainerRuntime: Kubernetes: Ok KVstore: Ok Controllers: Total 31 Failed 0

Cilium agent 'cilium-nrcwg': Status: Ok Health: Ok Nodes "" ContainerRuntime: Kubernetes: Ok KVstore: Ok Controllers: Total 47 Failed 0
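Each "Number of ... in logs" block above reports, for one log source, how often each of a fixed set of strings appears. A test such as this one can then fail when a must-investigate pattern like `level=error` shows up. The Go sketch below is only a rough illustration of that kind of scan. `countPatterns` is a hypothetical helper, not the actual implementation in `test/ginkgo-ext` or the Cilium test helpers, and the sample log lines are invented for the example.

```go
package main

import (
	"fmt"
	"strings"
)

// countPatterns is a hypothetical helper: for each pattern it counts how
// many log lines contain that pattern as a substring.
func countPatterns(logs string, patterns []string) map[string]int {
	counts := make(map[string]int, len(patterns))
	for _, p := range patterns {
		counts[p] = 0 // report zero for patterns that never match
	}
	for _, line := range strings.Split(logs, "\n") {
		for _, p := range patterns {
			if strings.Contains(line, p) {
				counts[p]++
			}
		}
	}
	return counts
}

func main() {
	// Patterns taken from the counts printed in this report.
	patterns := []string{
		"context deadline exceeded",
		"level=error",
		"level=warning",
		"Cilium API handler panicked",
		"Goroutine took lock for more than",
	}
	// Invented sample log lines, not output from this CI run.
	logs := "level=info msg=\"example start\"\n" +
		"level=warning msg=\"example warning\"\n" +
		"level=error msg=\"example error\""

	counts := countPatterns(logs, patterns)
	for _, p := range patterns {
		fmt.Printf("Number of %q in logs: %d\n", p, counts[p])
	}
	// Assumption: any level=error match is treated as a failure.
	if counts["level=error"] > 0 {
		fmt.Printf("FAIL: found %q in logs %d times\n", "level=error", counts["level=error"])
	}
}
```

Matching substrings per line keeps the sketch simple. The real suite may apply extra filtering, for example ignoring known-benign messages, which this example does not attempt.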

Standard Error


06:07:55 STEP: Running BeforeAll block for EntireTestsuite

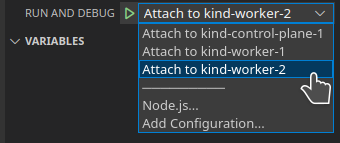

06:07:55 STEP: Starting tests: command line parameters: {Reprovision:false HoldEnvironment:false PassCLIEnvironment:true SSHConfig: ShowCommands:false TestScope: SkipLogGathering:false CiliumImage:quay.io/cilium/cilium-ci CiliumTag:5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb CiliumOperatorImage:quay.io/cilium/operator CiliumOperatorTag:5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb CiliumOperatorSuffix:-ci HubbleRelayImage:quay.io/cilium/hubble-relay-ci HubbleRelayTag:5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb ProvisionK8s:true Timeout:2h50m0s Kubeconfig:/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9/src/github.com/cilium/cilium/test/vagrant-kubeconfig KubectlPath:/tmp/kubectl RegistryCredentials: Multinode:true RunQuarantined:false Help:false} environment variables: [JENKINS_HOME=/var/jenkins_home ghprbSourceBranch=pr/joe/kind-debug VM_MEMORY=8192 MAIL=/var/mail/root SSH_CLIENT=54.148.123.155 35558 22 USER=root PROJ_PATH=src/github.com/cilium/cilium RUN_CHANGES_DISPLAY_URL=https://jenkins.cilium.io/job/Cilium-PR-K8s-1.16-kernel-4.9/2498/display/redirect?page=changes ghprbPullDescription=GitHub pull request #21108 of commit 5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb, no merge conflicts. 
NETNEXT=0 ghprbActualCommit=5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb SHLVL=1 CILIUM_TAG=5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb NODE_LABELS=baremetal ginkgo nightly node-model-starfish vagrant HUDSON_URL=https://jenkins.cilium.io/ GIT_COMMIT=b16cb37905c923b37a6e53029115459630df6c04 OLDPWD=/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9 GINKGO_TIMEOUT=170m HOME=/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9 ghprbTriggerAuthorLoginMention=@joestringer BUILD_URL=https://jenkins.cilium.io/job/Cilium-PR-K8s-1.16-kernel-4.9/2498/ ghprbPullAuthorLoginMention=@joestringer HUDSON_COOKIE=5597d960-fdb5-42af-9a78-21321b5dc16b JENKINS_SERVER_COOKIE=durable-0c71c7690bbf1f2abc72be868014b880 ghprbGhRepository=cilium/cilium DOCKER_TAG=5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb JobKernelVersion=49 DBUS_SESSION_BUS_ADDRESS=unix:path=/run/user/0/bus KERNEL=49 CONTAINER_RUNTIME=docker WORKSPACE=/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9 ghprbPullLongDescription=Review commit by commit.\r\n\r\nA new `make kind-image-debug` target wraps the Cilium image with a dlv debugger wrapper,\r\nallowing live debug of the container image after you deploy it.\r\n\r\nUsage:\r\n* `make kind-debug-agent`\r\n* attach the debugger to the target node on port 2345 and proceed\r\n * If you are using vscode then the .vscode/launch.json will automatically provide attach targets in your vscode debugger UI. 
Just pick your node target:\r\n \r\n\r\nNote that the Cilium pods will pause immediately on startup until you\r\nhave connected the debugger.\r\n\r\nThis PR exposes the debugger port for cilium-agent on each kind node as well, which is enabled by loading an image using `make kind-image-debug`.\r\n\r\nPort allocations:\r\n- 23401: First control plane node\r\n- 2340*: Subsequent kind-control-plane nodes (if defined)\r\n- 23411: First kind-worker node\r\n- 2341*: Subsequent kind-worker nodes (if defined)\r\n\r\nDelve should be listening on this port with multiprotocol support, so any IDEs or debugger frontends that understand either DAP or delve API v2 should be compatible with this approach. K8S_NODES=2 TESTDIR=/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9/src/github.com/cilium/cilium/test LOGNAME=root NODE_NAME=node-model-starfish ghprbCredentialsId=ciliumbot _=/usr/bin/java HUBBLE_RELAY_IMAGE=quay.io/cilium/hubble-relay-ci STAGE_NAME=BDD-Test-PR GIT_BRANCH=origin/pr/21108/merge EXECUTOR_NUMBER=0 ghprbTriggerAuthorLogin=joestringer TERM=xterm XDG_SESSION_ID=4 HOST_FIREWALL=0 CILIUM_OPERATOR_TAG=5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb BUILD_DISPLAY_NAME=Add Cilium debugger images and default debugging configuration for kind, vscode https://github.com/cilium/cilium/pull/21108 #2498 ghprbPullAuthorLogin=joestringer HUDSON_HOME=/var/jenkins_home ghprbTriggerAuthor=Joe Stringer JOB_BASE_NAME=Cilium-PR-K8s-1.16-kernel-4.9 PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/usr/local/go/bin:/root/go/bin sha1=origin/pr/21108/merge KUBECONFIG=/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9/src/github.com/cilium/cilium/test/vagrant-kubeconfig FOCUS=K8s BUILD_ID=2498 XDG_RUNTIME_DIR=/run/user/0 BUILD_TAG=jenkins-Cilium-PR-K8s-1.16-kernel-4.9-2498 RUN_QUARANTINED=false CILIUM_IMAGE=quay.io/cilium/cilium-ci JENKINS_URL=https://jenkins.cilium.io/ LANG=C.UTF-8 ghprbCommentBody=/test 
JOB_URL=https://jenkins.cilium.io/job/Cilium-PR-K8s-1.16-kernel-4.9/ ghprbPullTitle=Add Cilium debugger images and default debugging configuration for kind, vscode GIT_URL=https://github.com/cilium/cilium ghprbPullLink=https://github.com/cilium/cilium/pull/21108 BUILD_NUMBER=2498 JENKINS_NODE_COOKIE=de179ec8-a023-40fc-af16-d4d8a99d50e0 SHELL=/bin/bash GOPATH=/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9 RUN_DISPLAY_URL=https://jenkins.cilium.io/job/Cilium-PR-K8s-1.16-kernel-4.9/2498/display/redirect IMAGE_REGISTRY=quay.io/cilium ghprbAuthorRepoGitUrl=https://github.com/cilium/cilium.git FAILFAST=false HUDSON_SERVER_COOKIE=693c250bfb7e85bf ghprbTargetBranch=master JOB_DISPLAY_URL=https://jenkins.cilium.io/job/Cilium-PR-K8s-1.16-kernel-4.9/display/redirect K8S_VERSION=1.16 JOB_NAME=Cilium-PR-K8s-1.16-kernel-4.9 PWD=/home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9/src/github.com/cilium/cilium/test SSH_CONNECTION=54.148.123.155 35558 145.40.77.155 22 ghprbPullId=21108 CILIUM_OPERATOR_IMAGE=quay.io/cilium/operator HUBBLE_RELAY_TAG=5f61c9b6aa2c47d5e7a2c18475dc9dc909d792eb JobK8sVersion=1.16 VM_CPUS=3 CILIUM_OPERATOR_SUFFIX=-ci]

06:07:55 STEP: Ensuring the namespace kube-system exists

06:07:55 STEP: WaitforPods(namespace="kube-system", filter="-l k8s-app=cilium-test-logs")

06:07:57 STEP: WaitforPods(namespace="kube-system", filter="-l k8s-app=cilium-test-logs") => <nil>

06:07:57 STEP: Preparing cluster

06:07:58 STEP: Labelling nodes

06:07:58 STEP: Cleaning up Cilium components

06:07:58 STEP: Running BeforeAll block for EntireTestsuite K8sDatapathConfig

06:07:58 STEP: Ensuring the namespace kube-system exists

06:07:58 STEP: WaitforPods(namespace="kube-system", filter="-l k8s-app=cilium-test-logs")

06:07:58 STEP: WaitforPods(namespace="kube-system", filter="-l k8s-app=cilium-test-logs") => <nil>

06:07:58 STEP: Installing Cilium

06:07:59 STEP: Waiting for Cilium to become ready

06:08:36 STEP: Restarting unmanaged pods coredns-8cfc78c54-hd9xn in namespace kube-system

06:08:58 STEP: Validating if Kubernetes DNS is deployed

06:08:58 STEP: Checking if deployment is ready

06:08:59 STEP: Kubernetes DNS is not ready: only 0 of 1 replicas are available

06:08:59 STEP: Restarting Kubernetes DNS (-l k8s-app=kube-dns)

06:08:59 STEP: Waiting for Kubernetes DNS to become operational

06:08:59 STEP: Checking if deployment is ready

06:08:59 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:00 STEP: Checking if deployment is ready

06:09:00 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:01 STEP: Checking if deployment is ready

06:09:01 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:02 STEP: Checking if deployment is ready

06:09:02 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:03 STEP: Checking if deployment is ready

06:09:03 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:04 STEP: Checking if deployment is ready

06:09:04 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:05 STEP: Checking if deployment is ready

06:09:05 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:06 STEP: Checking if deployment is ready

06:09:06 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:07 STEP: Checking if deployment is ready

06:09:07 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:08 STEP: Checking if deployment is ready

06:09:08 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:09 STEP: Checking if deployment is ready

06:09:09 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:10 STEP: Checking if deployment is ready

06:09:10 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:11 STEP: Checking if deployment is ready

06:09:11 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:12 STEP: Checking if deployment is ready

06:09:12 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:13 STEP: Checking if deployment is ready

06:09:13 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:14 STEP: Checking if deployment is ready

06:09:14 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:15 STEP: Checking if deployment is ready

06:09:15 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:16 STEP: Checking if deployment is ready

06:09:16 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:17 STEP: Checking if deployment is ready

06:09:17 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:18 STEP: Checking if deployment is ready

06:09:18 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:19 STEP: Checking if deployment is ready

06:09:19 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:20 STEP: Checking if deployment is ready

06:09:20 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:21 STEP: Checking if deployment is ready

06:09:21 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:22 STEP: Checking if deployment is ready

06:09:22 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:23 STEP: Checking if deployment is ready

06:09:23 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:24 STEP: Checking if deployment is ready

06:09:24 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:25 STEP: Checking if deployment is ready

06:09:25 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:26 STEP: Checking if deployment is ready

06:09:26 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:27 STEP: Checking if deployment is ready

06:09:27 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:28 STEP: Checking if deployment is ready

06:09:28 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:29 STEP: Checking if deployment is ready

06:09:29 STEP: Kubernetes DNS is not ready yet: only 0 of 1 replicas are available

06:09:30 STEP: Checking if deployment is ready

06:09:30 STEP: Checking if kube-dns service is plumbed correctly

06:09:30 STEP: Checking if pods have identity

06:09:30 STEP: Checking if DNS can resolve

06:09:30 STEP: Validating Cilium Installation

06:09:30 STEP: Performing Cilium controllers preflight check

06:09:30 STEP: Performing Cilium status preflight check

06:09:30 STEP: Performing Cilium health check

06:09:30 STEP: Checking whether host EP regenerated

06:09:31 STEP: Performing Cilium service preflight check

06:09:31 STEP: Performing K8s service preflight check

06:09:33 STEP: Waiting for cilium-operator to be ready

06:09:33 STEP: WaitforPods(namespace="kube-system", filter="-l name=cilium-operator")

06:09:33 STEP: WaitforPods(namespace="kube-system", filter="-l name=cilium-operator") => <nil>

06:09:33 STEP: Making sure all endpoints are in ready state

06:09:34 STEP: Launching cilium monitor on "cilium-d6lj4"

06:09:34 STEP: Creating namespace 202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

06:09:34 STEP: Deploying demo_ds.yaml in namespace 202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

06:09:35 STEP: Applying policy /home/jenkins/workspace/Cilium-PR-K8s-1.16-kernel-4.9/src/github.com/cilium/cilium/test/k8s/manifests/l3-policy-demo.yaml

06:09:39 STEP: Waiting for 4m0s for 5 pods of deployment demo_ds.yaml to become ready

06:09:39 STEP: WaitforNPods(namespace="202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito", filter="")

06:09:39 STEP: WaitforNPods(namespace="202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito", filter="") => <nil>

06:09:39 STEP: Checking pod connectivity between nodes

06:09:39 STEP: WaitforPods(namespace="202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito", filter="-l zgroup=testDSClient")

06:09:39 STEP: WaitforPods(namespace="202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito", filter="-l zgroup=testDSClient") => <nil>

06:09:39 STEP: WaitforPods(namespace="202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito", filter="-l zgroup=testDS")

06:09:44 STEP: WaitforPods(namespace="202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito", filter="-l zgroup=testDS") => <nil>

06:09:49 STEP: Checking that ICMP notifications in egress direction were observed

06:09:49 STEP: Checking that ICMP notifications in ingress direction were observed

06:09:49 STEP: Checking the set of TCP notifications received matches expectations

06:09:49 STEP: Looking for TCP notifications using the ephemeral port "45572"

=== Test Finished at 2022-09-01T06:09:49Z====

06:09:49 STEP: Running JustAfterEach block for EntireTestsuite K8sDatapathConfig

FAIL: Found 1 k8s-app=cilium logs matching list of errors that must be investigated:

level=error

===================== TEST FAILED =====================

06:09:49 STEP: Running AfterFailed block for EntireTestsuite K8sDatapathConfig

cmd: kubectl get pods -o wide --all-namespaces

Exitcode: 0

Stdout:

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito test-k8s2-5b756fd6c5-52fz4 2/2 Running 0 15s 10.0.1.92 k8s2 <none> <none>

202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito testclient-2-bjf2p 1/1 Running 0 15s 10.0.1.7 k8s2 <none> <none>

202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito testclient-2-q4mjg 1/1 Running 0 15s 10.0.0.112 k8s1 <none> <none>

202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito testclient-2dm6l 1/1 Running 0 15s 10.0.0.200 k8s1 <none> <none>

202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito testclient-84hqb 1/1 Running 0 15s 10.0.1.149 k8s2 <none> <none>

202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito testds-98856 2/2 Running 0 15s 10.0.0.159 k8s1 <none> <none>

202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito testds-c8h9w 2/2 Running 0 15s 10.0.1.26 k8s2 <none> <none>

cilium-monitoring grafana-7fd557d749-f5k7b 1/1 Running 0 112s 10.0.1.50 k8s2 <none> <none>

cilium-monitoring prometheus-d87f8f984-whplh 1/1 Running 0 112s 10.0.1.6 k8s2 <none> <none>

kube-system cilium-d6lj4 1/1 Running 0 111s 192.168.56.11 k8s1 <none> <none>

kube-system cilium-nrcwg 1/1 Running 0 111s 192.168.56.12 k8s2 <none> <none>

kube-system cilium-operator-76b5cbf88d-9qcl7 1/1 Running 0 111s 192.168.56.12 k8s2 <none> <none>

kube-system cilium-operator-76b5cbf88d-hrvzl 1/1 Running 1 111s 192.168.56.11 k8s1 <none> <none>

kube-system coredns-8cfc78c54-hxspl 1/1 Running 0 74s 10.0.1.126 k8s2 <none> <none>

kube-system etcd-k8s1 1/1 Running 0 4m55s 192.168.56.11 k8s1 <none> <none>

kube-system kube-apiserver-k8s1 1/1 Running 0 4m49s 192.168.56.11 k8s1 <none> <none>

kube-system kube-controller-manager-k8s1 1/1 Running 2 4m37s 192.168.56.11 k8s1 <none> <none>

kube-system kube-proxy-5rqnt 1/1 Running 0 2m30s 192.168.56.12 k8s2 <none> <none>

kube-system kube-proxy-g674j 1/1 Running 0 5m19s 192.168.56.11 k8s1 <none> <none>

kube-system kube-scheduler-k8s1 1/1 Running 2 4m37s 192.168.56.11 k8s1 <none> <none>

kube-system log-gatherer-b6jrx 1/1 Running 0 115s 192.168.56.11 k8s1 <none> <none>

kube-system log-gatherer-cs9dr 1/1 Running 0 115s 192.168.56.12 k8s2 <none> <none>

kube-system registry-adder-72vbx 1/1 Running 0 2m27s 192.168.56.12 k8s2 <none> <none>

kube-system registry-adder-s59ph 1/1 Running 0 2m27s 192.168.56.11 k8s1 <none> <none>

Stderr:

Fetching command output from pods [cilium-d6lj4 cilium-nrcwg]

cmd: kubectl exec -n kube-system cilium-d6lj4 -c cilium-agent -- cilium status

Exitcode: 0

Stdout:

KVStore: Ok Disabled

Kubernetes: Ok 1.16 (v1.16.15) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Endpoint", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Disabled

Host firewall: Disabled

CNI Chaining: none

Cilium: Ok 1.12.90 (v1.12.90-5f61c9b6)

NodeMonitor: Listening for events on 3 CPUs with 64x4096 of shared memory

Cilium health daemon: Ok

IPAM: IPv4: 5/254 allocated from 10.0.0.0/24, IPv6: 5/254 allocated from fd02::/120

IPv6 BIG TCP: Disabled

BandwidthManager: Disabled

Host Routing: Legacy

Masquerading: IPTables [IPv4: Enabled, IPv6: Enabled]

Controller Status: 31/31 healthy

Proxy Status: OK, ip 10.0.0.92, 0 redirects active on ports 10000-20000

Global Identity Range: min 256, max 65535

Hubble: Ok Current/Max Flows: 243/65535 (0.37%), Flows/s: 1.38 Metrics: Disabled

Encryption: Disabled

Cluster health: 2/2 reachable (2022-09-01T06:09:32Z)

Stderr:

cmd: kubectl exec -n kube-system cilium-d6lj4 -c cilium-agent -- cilium endpoint list

Exitcode: 0

Stdout:

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

66 Enabled Disabled 41317 k8s:io.cilium.k8s.policy.cluster=default fd02::18 10.0.0.159 ready

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

k8s:zgroup=testDS

975 Disabled Disabled 1 k8s:cilium.io/ci-node=k8s1 ready

k8s:node-role.kubernetes.io/master

reserved:host

1748 Disabled Disabled 4 reserved:health fd02::2 10.0.0.147 ready

4076 Disabled Disabled 52810 k8s:io.cilium.k8s.policy.cluster=default fd02::61 10.0.0.200 ready

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

k8s:zgroup=testDSClient

4090 Disabled Disabled 4469 k8s:io.cilium.k8s.policy.cluster=default fd02::50 10.0.0.112 ready

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

k8s:zgroup=testDSClient2

Stderr:

cmd: kubectl exec -n kube-system cilium-nrcwg -c cilium-agent -- cilium status

Exitcode: 0

Stdout:

KVStore: Ok Disabled

Kubernetes: Ok 1.16 (v1.16.15) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Endpoint", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Disabled

Host firewall: Disabled

CNI Chaining: none

Cilium: Ok 1.12.90 (v1.12.90-5f61c9b6)

NodeMonitor: Listening for events on 3 CPUs with 64x4096 of shared memory

Cilium health daemon: Ok

IPAM: IPv4: 9/254 allocated from 10.0.1.0/24, IPv6: 9/254 allocated from fd02::100/120

IPv6 BIG TCP: Disabled

BandwidthManager: Disabled

Host Routing: Legacy

Masquerading: IPTables [IPv4: Enabled, IPv6: Enabled]

Controller Status: 47/47 healthy

Proxy Status: OK, ip 10.0.1.74, 0 redirects active on ports 10000-20000

Global Identity Range: min 256, max 65535

Hubble: Ok Current/Max Flows: 423/65535 (0.65%), Flows/s: 3.04 Metrics: Disabled

Encryption: Disabled

Cluster health: 2/2 reachable (2022-09-01T06:09:33Z)

Stderr:

cmd: kubectl exec -n kube-system cilium-nrcwg -c cilium-agent -- cilium endpoint list

Exitcode: 0

Stdout:

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

134 Disabled Disabled 1 k8s:cilium.io/ci-node=k8s2 ready

reserved:host

597 Disabled Disabled 27124 k8s:io.cilium.k8s.policy.cluster=default fd02::14f 10.0.1.126 ready

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

701 Disabled Disabled 15439 k8s:io.cilium.k8s.policy.cluster=default fd02::1b2 10.0.1.92 ready

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

k8s:zgroup=test-k8s2

774 Disabled Disabled 37100 k8s:app=prometheus fd02::1a5 10.0.1.6 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=prometheus-k8s

k8s:io.kubernetes.pod.namespace=cilium-monitoring

1676 Disabled Disabled 31028 k8s:app=grafana fd02::1e6 10.0.1.50 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=cilium-monitoring

2227 Disabled Disabled 4469 k8s:io.cilium.k8s.policy.cluster=default fd02::18d 10.0.1.7 ready

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

k8s:zgroup=testDSClient2

2494 Disabled Disabled 4 reserved:health fd02::19f 10.0.1.220 ready

2732 Disabled Disabled 52810 k8s:io.cilium.k8s.policy.cluster=default fd02::112 10.0.1.149 ready

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

k8s:zgroup=testDSClient

2808 Enabled Disabled 41317 k8s:io.cilium.k8s.policy.cluster=default fd02::120 10.0.1.26 ready

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

k8s:zgroup=testDS

Stderr:

===================== Exiting AfterFailed =====================

06:09:58 STEP: Running AfterEach for block EntireTestsuite K8sDatapathConfig

06:09:58 STEP: Deleting deployment demo_ds.yaml

06:09:59 STEP: Deleting namespace 202209010609k8sdatapathconfigmonitoraggregationchecksthatmonito

06:10:14 STEP: Running AfterEach for block EntireTestsuite

[[ATTACHMENT|e8a40328_K8sDatapathConfig_MonitorAggregation_Checks_that_monitor_aggregation_restricts_notifications.zip]]

ZIP Links:


https://jenkins.cilium.io/job/Cilium-PR-K8s-1.16-kernel-4.9//2498/artifact/e8a40328_K8sDatapathConfig_MonitorAggregation_Checks_that_monitor_aggregation_restricts_notifications.zip

https://jenkins.cilium.io/job/Cilium-PR-K8s-1.16-kernel-4.9//2498/artifact/test_results_Cilium-PR-K8s-1.16-kernel-4.9_2498_BDD-Test-PR.zip

Jenkins URL: https://jenkins.cilium.io/job/Cilium-PR-K8s-1.16-kernel-4.9/2498/

If this is a duplicate of an existing flake, comment 'Duplicate of #<issue-number>' and close this issue.
