AI Agents Will Crash in 2025, Unless They Are Fixed Immediately

Data is the fuel, AI is the engine, and agents are the aircraft.

The AI agent revolution is at full throttle. Companies are betting billions of dollars on AI-driven assistants, copilots, and automation tools that promise to change how we work and make decisions.

However, a critical flaw could cause AI agents to crash and burn in 2025. What’s the issue? The data fueling them. No matter how powerful an AI agent is, bad data will stall it mid-flight. You might not notice at first. Your AI agent might seem to work fine until it starts hallucinating, giving wrong insights, or even making costly business decisions based on incorrect data.

The scariest part: most companies don’t realize it’s happening.

The looming crash

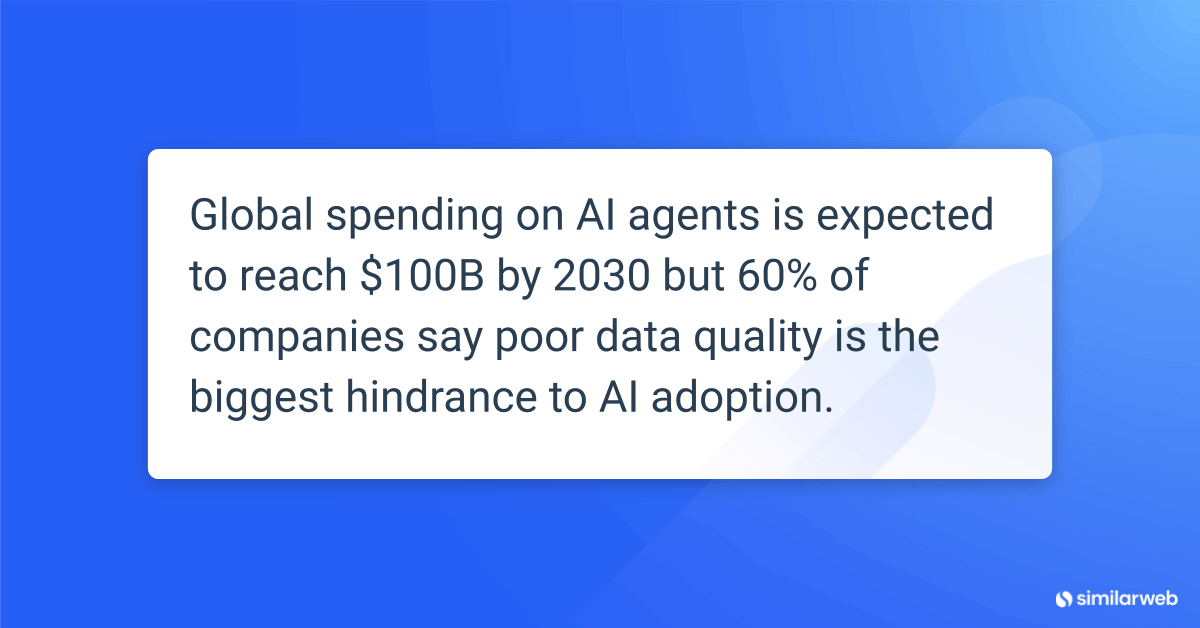

According to an article in Engineering News, by 2030, global spending on AI agents is expected to reach $100+ billion. Yet today:

- More than 60% of companies report that poor data quality is their biggest barrier to AI adoption

- The remaining 40% have the same problem; they just don’t know it yet

So, what happens when AI agents become even more deeply embedded in our workflows with unreliable data?

- What if an AI sales agent sent an introductory outreach email to an existing client because its training data was outdated

- Imagine an AI financial agent making investment choices based on biased, low-quality market signals

- Suppose an AI SEO agent only wrote amazing content praising your competitors

So what’s the crash? Having faulty AI agents implemented company-wide, across all industries, spreading wrong information because of bad data.

Data is the fuel

Think of your AI agent as a supersonic jet. It’s engineered for speed, precision, and optimized performance, allowing businesses to adapt strategies and make key real-time decisions.

But there’s one non-negotiable requirement: fuel quality. Bad fuel, and you can’t take off.

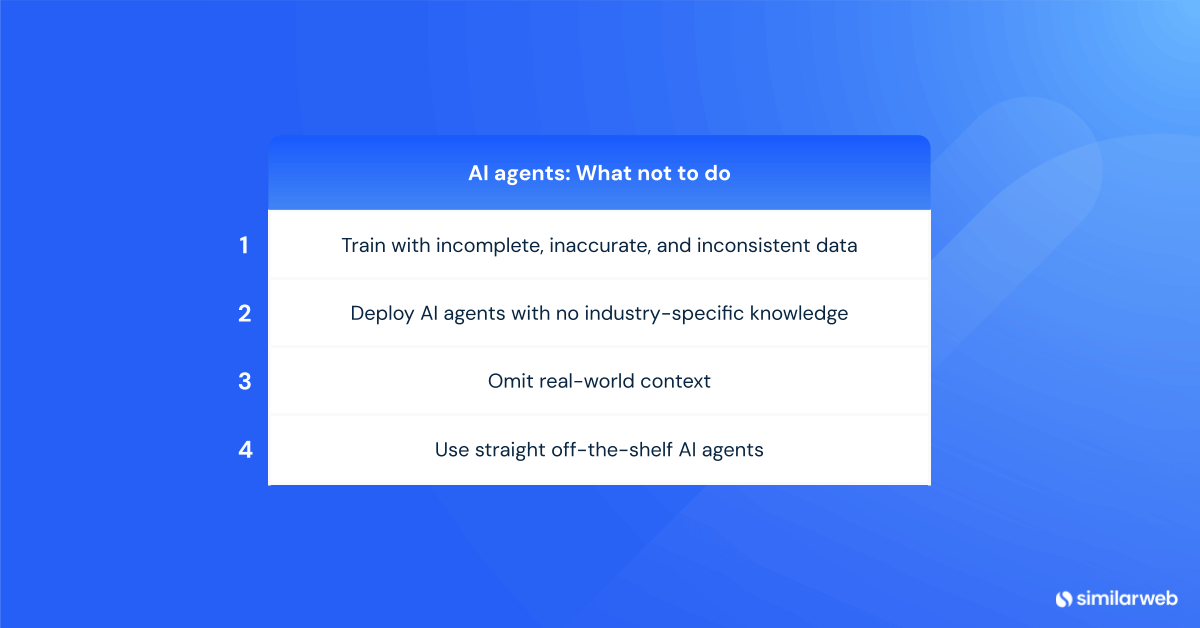

Your AI agent’s fuel is data. If that data is:

- Incomplete → The agent operates on half-truths

- Inaccurate → The agent confidently delivers wrong answers

- Inconsistent → The agent gets unpredictable or contradictory results, which often go unnoticed
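
As a rough illustration of these three failure modes, here is a minimal Python sketch of a data audit. Everything in it is an assumption made for the example: the record fields (`company`, `email`, `status`, `updated`), the 90-day staleness threshold, and the use of a malformed email or a stale timestamp as a stand-in for "inaccurate". Real accuracy checks usually need a trusted reference source.

```python
from datetime import date

# Hypothetical CRM-style fields; the names are illustrative only.
REQUIRED_FIELDS = ("company", "email", "status", "updated")


def audit_records(records, today, max_age_days=90):
    """Return (record_index, issue) tuples for records that look unsafe to use."""
    issues = []
    first_status = {}  # company -> first status seen for that company
    for i, rec in enumerate(records):
        # Incomplete: a required field is missing or empty.
        for field in REQUIRED_FIELDS:
            if not rec.get(field):
                issues.append((i, f"incomplete: missing {field}"))

        # Inaccurate (proxy checks only): malformed email or stale record.
        email = rec.get("email") or ""
        if email and "@" not in email:
            issues.append((i, "inaccurate: malformed email"))
        updated = rec.get("updated")
        if updated and (today - updated).days > max_age_days:
            issues.append((i, "inaccurate: stale record"))

        # Inconsistent: the same company appears with conflicting statuses.
        company, status = rec.get("company"), rec.get("status")
        if company and status:
            if first_status.setdefault(company, status) != status:
                issues.append((i, "inconsistent: conflicting status"))
    return issues


records = [
    {"company": "Acme", "email": "ops@acme.example", "status": "client",
     "updated": date(2025, 1, 10)},
    {"company": "Acme", "email": "ops@acme.example", "status": "prospect",
     "updated": date(2024, 3, 1)},
    {"company": "Globex", "email": "", "status": "prospect",
     "updated": date(2025, 1, 5)},
]

issues = audit_records(records, today=date(2025, 1, 31))
for index, issue in issues:
    print(index, issue)
```

In this toy data set, the second Acme record is both stale and in conflict with the first one, which is the kind of record that could lead a sales agent to send an introductory email to an existing client.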

It’s not about first-party vs. third-party data because you need both. It’s about how well that data is cleaned, structured, and interpreted.

Good agents don’t just need data. They need the right data in the right context at the right time.

AI is the engine

The AI engines driving agents are trained on human decision-making patterns. This can be incredibly powerful, but it also means they can replicate human errors, biases, and inconsistencies. Every AI data scientist, engineer, and analyst does things differently, so an agent won’t operate as expected unless its AI engine is properly tuned and aligned to fly.

One common misconception companies have: “We can just fix it with infrastructure.”

This is wrong. AI infrastructure is critical, but it doesn’t fix bad data.

Treat your engine well. Make sure it’s the right engine for takeoff and landing, fueled with the right data. Confirm it has the right infrastructure to reach the right destination on time and on the correct runway.

Agents are the aircraft

I like aircraft; they’re truly magnificent in the air. Whether it’s a transport plane carrying hundreds of people and tons of weight or a helicopter flying low and fast to rescue people, every aircraft has its specialization. You wouldn’t send a commercial airliner on a suborbital spaceflight, just like you shouldn’t expect a general-purpose AI agent to handle everything.

But that’s just what companies are doing today:

- Deploying AI agents with no industry-specific knowledge

- Ignoring real-world context in AI decision-making

- Assuming AI agents work straight out of the box with no customization or fine-tuning

If an AI agent doesn’t understand your business, it will make surface-level decisions that could hurt more than help.

Large language models (LLMs) need context and knowledge. Without them, an LLM only provides general, high-level answers. The same goes for agents, which, in case you didn’t know, are mostly built on LLMs. This means that an SEO agent, for example, needs to be the ultimate SEO expert with the deepest knowledge, and, most importantly, it needs to understand YOUR context.

I’ve said it before: just as all humans are different, so are LLMs. Make sure you train them on your context, knowledge, and know-how.

Context matters. Specialization matters.

Pre- and post-flight evaluations

One of the least talked about but extremely important aspects of air travel is taking care of the aircraft. The pre-flight crew is crucial to ensuring a safe flight, and the work continues after landing: as soon as a plane lands, the crew makes sure it is in top form, checking all systems and analyzing what’s working, what needs fine-tuning, and what needs fixing. The same goes for agents, which need checks both before and after they are put to work.

How to avoid crashing in 2025

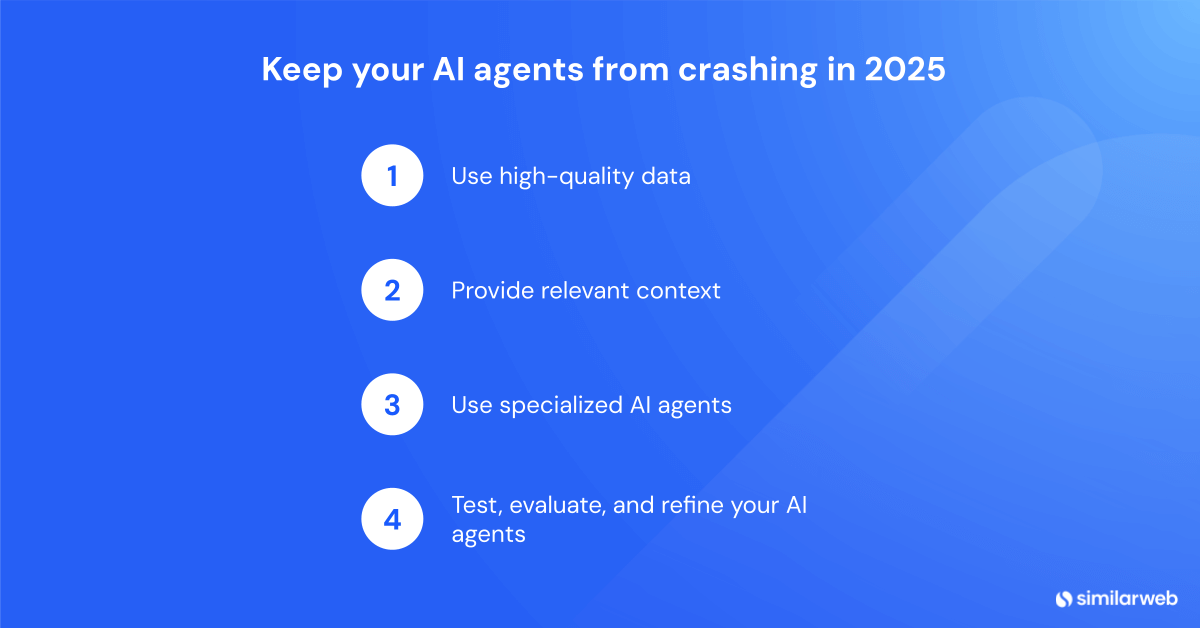

To ensure your AI agents don’t crash in 2025, you need to:

- Use high-quality data: Ongoing investment in data integrity, governance, and structured pipelines is non-negotiable

- Provide relevant context: AI agents are not one-size-fits-all. If you don’t train them on your company’s knowledge, processes, and nuances, you’ll get generic, low-value outputs

- Use specialized AI agents: Just as pilots train to fly specific types of aircraft, your agents should be purpose-built for specific business tasks

- Test, evaluate, and refine: AI agents require constant monitoring, human feedback loops, and performance benchmarks to improve over time
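
The last point can be sketched as a small benchmark gate. This is a minimal Python illustration under stated assumptions, not a definitive evaluation method: `toy_agent` is a hypothetical stand-in for a real agent call, the labels and test cases are invented, and the 90% pass-rate threshold is arbitrary.

```python
def evaluate(agent, cases, min_pass_rate=0.9):
    """Run the agent on labeled cases.

    Returns (pass_rate, failures, ok). Failures are kept so a human
    reviewer can inspect them and feed corrections back.
    """
    if not cases:
        raise ValueError("at least one benchmark case is required")
    failures = []
    for prompt, expected in cases:
        answer = agent(prompt)
        if answer != expected:
            failures.append((prompt, expected, answer))
    pass_rate = 1 - len(failures) / len(cases)
    return pass_rate, failures, pass_rate >= min_pass_rate


def toy_agent(company):
    # Hypothetical stand-in: a real agent would call a model or a service.
    known = {"Acme": "existing_client"}
    return known.get(company, "unknown")


# Invented benchmark: company name -> label the agent should return.
cases = [
    ("Acme", "existing_client"),
    ("Globex", "prospect"),
]

pass_rate, failures, ok = evaluate(toy_agent, cases)
print(f"pass rate: {pass_rate:.0%}, ready to deploy: {ok}")
for prompt, expected, answer in failures:
    print(f"needs review: {prompt!r} expected {expected!r}, got {answer!r}")
```

Running the same benchmark before each release and again on a schedule after deployment mirrors the pre- and post-flight checks described earlier.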

So, the question becomes: Do you want agents that glide along without real impact, or do you want a fleet of high-performing, specialized AI agent copilots that truly drive your business?

Without the right data, your AI agent will never take off. Understanding this today means that you will win in 2025.

FAQs

Why are AI agents at risk of “crashing” in 2025?

AI agents rely on data as their fuel. If that data is incomplete, inaccurate, or inconsistent, agents will make incorrect decisions—sometimes without any obvious warning signs. As AI becomes more embedded in business workflows, bad data will lead to costly mistakes, misinformation, and inefficiencies, causing AI-driven initiatives to fail.

Isn’t AI infrastructure enough to fix bad data?

No. While strong AI infrastructure is important, it cannot correct poor data quality. AI agents need structured, relevant, and real-time data to function effectively. Without it, even the most advanced AI systems will produce unreliable results, no matter how robust their infrastructure is.

How can businesses prevent AI agents from failing?

To avoid AI agent failures, businesses must:

- Ensure high-quality, clean, and well-structured data

- Train agents on company-specific knowledge and real-world context

- Use specialized AI agents for specific tasks instead of relying on generic models

- Continuously test, refine, and monitor AI performance through human feedback and evaluation

By prioritizing these steps, businesses can ensure their AI agents remain reliable, effective, and ready for the future.

How does Data-as-a-Service (DaaS) help prevent AI agent failures?

DaaS provides high-quality, structured, and continuously updated data that AI agents need to operate effectively. Instead of relying on static or outdated information, businesses can leverage DaaS solutions to fuel their AI agents with accurate, real-time insights. This ensures AI-driven decisions are based on the right context, reducing the risk of errors, bias, and misinformation. Investing in a reliable DaaS provider is one of the most effective ways to future-proof AI agents and keep them from “crashing” in 2025.
